ChiefRunningPhist


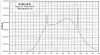

Ya, I thought that too, but I settled on the reasoning that there really is little difference between a quarter of a watt per m² (UV index 10.6) and an eighth of a watt per m² (UV index 5.3), and that a plant will see UVB somewhere in that range (well, actually anywhere from 0.0 mW/m² to 250+ mW/m²) regardless of what it does to humans. It's a way of obtaining a dosage range from observed and documented UV radiation. If you believe the plant grows best under the sun (I do), then using the sun's documented UVB dosage as a guideline might bring you closer to what the plant wants. I'd say ideally 1/4 watt of UVB radiation per m² would be the max you'd want, with a peak of 305-310 nm and a wavelength range from 285 nm to 325 nm.

I'm not sure if the erythemal action spectrum (how much each wavelength burns your skin) can be translated exactly to a plant response. Skin and leaves are not the same. Also, @Randomblame was talking about having the right balance between UVA and UVB. Apparently he had a loss of yield with too much UVB.

But I still find it an intriguing approach...
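A minimal Python sketch of the UVB guideline described above, assuming you have a meter reading of UVB irradiance in mW/m² plus the lamp's peak and emission range from its spec sheet. The thresholds are the figures from the post, and `post_uv_index()` just scales linearly from the post's own pairing (0.25 W/m² to 10.6). The official UV index is defined as 40 × the erythemally weighted irradiance in W/m², so treat that factor as the post's rule of thumb, not a real conversion. `check_lamp()` and its parameters are hypothetical names.

```python
# Sketch only: thresholds are the guideline figures from the post, not a
# published standard. check_lamp() and its parameters are hypothetical.

POST_UVI_PER_W_M2 = 10.6 / 0.25   # linear factor implied by the post's pairs (~42.4)
MAX_UVB_W_M2 = 0.25               # suggested ceiling: 1/4 W of UVB per m^2
PEAK_NM = (305.0, 310.0)          # suggested emission peak window
RANGE_NM = (285.0, 325.0)         # suggested overall emission range


def post_uv_index(uvb_w_m2: float) -> float:
    """UV index the post associates with a UVB irradiance (linear scaling)."""
    return uvb_w_m2 * POST_UVI_PER_W_M2


def check_lamp(uvb_mw_m2: float, peak_nm: float, lo_nm: float, hi_nm: float) -> list:
    """Return the ways a lamp reading departs from the post's guideline."""
    issues = []
    if uvb_mw_m2 / 1000.0 > MAX_UVB_W_M2:  # convert mW/m^2 to W/m^2
        issues.append("UVB irradiance above 0.25 W/m^2")
    if not PEAK_NM[0] <= peak_nm <= PEAK_NM[1]:
        issues.append("peak outside 305-310 nm")
    if lo_nm < RANGE_NM[0] or hi_nm > RANGE_NM[1]:
        issues.append("emission extends outside 285-325 nm")
    return issues


if __name__ == "__main__":
    print(round(post_uv_index(0.25), 1))   # 10.6
    print(round(post_uv_index(0.125), 1))  # 5.3
    print(check_lamp(200.0, 308.0, 290.0, 320.0))  # []
    print(check_lamp(300.0, 313.0, 280.0, 320.0))  # lists all three issues
```

Whether skin-burn weighting says anything useful about leaves is exactly the open question raised above, so this only checks raw numbers against the suggested window.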

I'm not sure what the correct ratio of UVA:UVB should be. It would be great to see some studies. I know that with the sun it's about a 95:5 UVA:UVB ratio, so I think as long as the UVB is present you really can't have too much UVA, but too little UVA for the UVB present could be an issue. I'm trying to go with at least 4× as much UVA as UVB. It's nowhere near the sun's 19:1 ratio, though. Not that it matters, but I think the Solacure bulbs look close to a 4:1 ratio, maybe 5:1...

I may find I need to add more UVA. Idk..
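A quick Python sketch of the UVA:UVB arithmetic, assuming irradiances in mW/m². The ~95:5 solar split and the 4:1 target come from the post, the 250 mW/m² UVB figure is the suggested maximum mentioned earlier, and the function names are hypothetical.

```python
# Sketch: UVA:UVB ratio arithmetic using the figures in the post.
# Function names are hypothetical; units are mW/m^2 throughout.


def ratio(uva: float, uvb: float) -> float:
    """UVA per unit of UVB."""
    return uva / uvb


def uva_needed(uvb_mw_m2: float, target_ratio: float) -> float:
    """Minimum UVA irradiance to reach a target UVA:UVB ratio."""
    return uvb_mw_m2 * target_ratio


def uvb_fraction(target_ratio: float) -> float:
    """Share of total UV (UVA + UVB) that is UVB at a given ratio."""
    return 1.0 / (target_ratio + 1.0)


if __name__ == "__main__":
    print(ratio(95, 5))             # 19.0, the sun's ~19:1
    print(uva_needed(250.0, 4.0))   # 1000.0 mW/m^2 of UVA at 4:1
    print(uva_needed(250.0, 19.0))  # 4750.0 mW/m^2 to match the sun
    print(uvb_fraction(4.0))        # 0.2, i.e. 20% UVB vs ~5% in sunlight
```

At 4:1, UVB makes up 20% of the total UV instead of roughly 5%, which is why matching the sun's ratio at the same UVB level would take close to five times as much UVA.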
